Welcome to the final step (but it’s not for sure) of our exploration into large language models world. Yes, we’re not done yet 😁

In the previous episodes: we discussed generative AI and LLMs — a new trend of spelling and grammar checkers —and studied the art of prompt engineering.

This time, our spotlight is on sharing expertise in training multilingual LLMs — Claude and Jurassic. Specifically, we’ll dive into tuning their parameters: adjusting temperature, top P, top K, and more. These nuances play a pivotal role in prompt engineering, ensuring optimal performance during text rewrite and content generation.

Whether you’re shaping the future of text-based applications and feel free to leave the comments below.

Choosing a multilingual LLM

We suppose you know a thing or two about large language models for English and how to play with them using prompts. If not, you’re welcome to read Part 1 and Part 2 of this series before you proceed further.

Today we’ll be talking about multilingual LLMs. Multilingual LLMs are trained on massive amounts of text in multiple languages and can understand, process, and produce human language in different languages.

They have demonstrated remarkable potential in handling multilingual machine translation (MMT).

In fact, a recent study has shown that LLMs’ translation capabilities are continually improving, and they can even generate moderate translations on zero-resource languages (those which are just over 1% of those actively spoken globally).

However, there are still some challenges that need to be addressed. For instance, LLMs face a large gap towards commercial translation systems, especially in the case of low-resource languages. Additionally, some LLMs are English-dominant and support only dozens of popular natural languages.

Despite these challenges, LLMs are a promising area of research in natural language processing (NLP) and are expected to play a significant role in the future of language processing.

There are many multilingual LLMs over there and when selecting one for testing and development purposes, there are several things to take into consideration.

We’re going to share our own experience. When selecting a multilingual language model (LLM) on Amazon Bedrock, we considered several factors such as pricing, licensing, use cases, model quality, model size, and model compatibility.

Pricing: Bedrock offers two pricing plans for inference: On-Demand and Provisioned Throughput.

With the On-Demand mode, you only pay for what you use, with no time-based term commitments. For text generation models, you are charged for every input token processed and every output token generated. A token is comprised of a few characters and refers to the basic unit of text that a model learns to understand the user input and prompt.

Custom models can only be accessed using Provisioned Throughput. A model unit provides a certain throughput, which is measured by the maximum number of input or output tokens processed per minute. With the Provisioned Throughput pricing, you are charged by the hour, and you have the flexibility to choose between 1-month or 6-month commitment terms.

Licensing: Different LLMs on Bedrock may have different licensing agreements. For example, Bloom is licensed under the bigscience-bloom-rail-1.0 license, which restricts it from being used for certain use cases like giving medical advice and medical results interpretation. Claude and Jurassic are both proprietary models, and their licensing agreements are publicly available on Bedrock. It’s important to review the licensing agreements of each LLM before making a selection to understand possible limitations of AI services.

Use cases: Different LLMs may be better suited for different use cases. For example, Jurassic-2 Ultra is AI21’s most powerful model for complex tasks that require advanced text generation and comprehension. Popular use cases include question answering, summarization, long-form copy generation, advanced information extraction, and more. Claude 1.2-1.3 are Anthropic’s general purpose large language models designed for question answering, information extraction, removing PII, content generation, multiple choice classification, roleplay, comparing text, summarization, document Q&A with citation.

Model type. On Bedrock, there are different types of models: text-based and chat-based ones. The primary distinction between LLMs and Chat models lies in their input and output structure. LLMs operate on a text-based input and output, while Chat models follow a message-based input and output system.

Model quality and size: The quality of the LLM is an important factor to consider. You should look for models that have been trained on large and diverse datasets, as these models are more likely to produce high-quality results. Larger models tend to perform better, but they also require more computational resources to run. You should consider the size of the model in relation to your specific use case and the resources you have available.

According to Anthropic, Claude 1.3 is a large language model with an input size of 100k tokens. On the other hand, Claude 1.2 is a smaller model called Claude Instant 1.2 which has an input size of 2048 tokens.

The Jurassic-2 Ultra is the largest and most powerful model in the Jurassic series, ideal for the most complex language processing tasks and generative text applications.

Model compatibility: You should also consider the compatibility of the LLM with your specific application or platform. Some LLMs may be better suited for certain applications or platforms than others. You should review the documentation for each LLM to ensure that it is compatible with your specific use case.

In addition to these factors, you should also consider other important things such as the model availability, projected scale of your operation, available computational capacities, and the source and state of enterprise data which you will integrate with and ultimately use the LLM for.

For example, Claude 1.3 is a legacy model version and will be unavailable on Feb 28, 2024 on the Amazon AWS —this fact made us consider another option — Claude 1.2 Instant.

How to tune LLM parameters: temperature, top P, max completion length

Each LLM demo is equipped with model parameters, which can be successfully tweaked to impact the final output. When tuning the behavior of the language model, there are several parameters that can be adjusted to improve its performance. Here are some of the most common parameters:

Temperature is a parameter that controls the “creativity” of the language model. A higher temperature will result in more diverse and unpredictable output, while a lower temperature will result in more conservative and predictable output.

Top P is a sampling strategy that selects the most probable tokens whose cumulative probability exceeds a certain threshold. This strategy is useful for generating text that is both coherent and diverse. Increasing the value of Top P will result in more diverse output, while decreasing it will result in more conservative output.

The max completion length parameter is used to specify the maximum length of the text generated by a language model. It is usually measured in tokens, which are the smallest units of text that a language model can process.

Additional parameters include top K, stop sequences, and penalties. On Bedrock, the LLM demos are sometimes offered with additional parameters, but not always.

Top K is a sampling strategy that selects the top K most probable tokens. This strategy is useful for generating text that is both coherent and conservative. Decreasing the value of top K may result in more conservative output, while increasing it may result in more diverse output. But the correlation isn’t always linear. When the parameter value is small, it can sometimes create diversity due to the limitation of the set of probable tokens, which includes non-obvious ones. As a rule, the higher the value of the model is, the greater the pool of tokens choice the model has. Because of this, the output can sometimes be more diverse.

Stop sequences are sequences of tokens that, when generated by the language model, indicate that the text generation process should stop. Stop sequences can be used to ensure that the language model generates text that is relevant to a specific topic or context. Adding more stop sequences will result in more relevant output, while removing them will result in more diverse output.

Penalties are used to discourage the language model from generating certain types of output. For example, a repetition penalty can be used to discourage the language model from generating the same token multiple times in a row. Other penalties can be used to discourage the language model from generating offensive or inappropriate content. Increasing the value of penalties will result in more conservative output, while decreasing it will result in more diverse output.

By adjusting these parameters, you can tune the behavior of the language model to make sure the generated text is more relevant, coherent, and diverse. However, it’s important to note that these parameters can interact with each other in complex ways, and finding the optimal combination of parameters can be a challenging task.

During the testing stage, we played with these parameters and can safely state that they do impact the final output. How? Read further to discover.

Testing multilingual LLMs Claude 1.3 and 1.2 and Jurassic-2 Ultra

We’ve tested Claude 1.3 and 1.2 (Instant) as well as Jurassic Ultra in three languages — German, Spanish and French, on different prompts for operations with texts: text shortening, text expansion, text rewrite, style improvement, style shift from formal to informal and vice versa, text summarization and proofreading.

We made hundreds of attempts and it’s literally impossible to showcase all them here. So, we’ll give you an example of one iteration and the outcome we got by changing the Jurassic-2 Ultra parameters.

We used a common business email as an input text:

“Ich hoffe, diese Nachricht erreicht Sie in gutem Zustand.

Ich schreibe Ihnen, um bezüglich unserer jüngsten Zusammenarbeit den Kontakt zu halten und mögliche nächste Schritte zu besprechen. Das erste Feedback unseres Teams war überwältigend positiv, und wir sind bestrebt, Wege zu finden, unsere erfolgreiche Partnerschaft fortzusetzen.

Könnten wir für nächste Woche ein Telefonat vereinbaren, um dies detaillierter zu besprechen? Bitte teilen Sie mir Ihre Verfügbarkeit mit, und ich werde sicherstellen, dass ein für Sie passender Zeitpunkt gebucht wird.

Ich danke Ihnen nochmals für Ihre Hingabe und Ihre harte Arbeit. Ich freue mich auf unsere fortgesetzte Zusammenarbeit und den gemeinsamen Erfolg, den sie uns bringen wird.

Mit freundlichen Grüßen,”

The prompt for text shortening operation:

## Rolle

Bitte fungieren Sie als menschlicher Redakteur.

## Aufgabe

Kürzen Sie den Text.

## Richtlinien

1. **Stil**

Kürzen Sie den folgenden Text, während Sie seine wesentliche Bedeutung, Klarheit und grammatikalische Korrektheit wahren. Konzentrieren Sie sich auf Prägnanz, Relevanz und Klarheit Ihrer Überarbeitung.

2. **Umfang**

Vermeiden Sie es, den Text übermäßig zu komprimieren (optimal — 10-30%), sodass wichtige Informationen verloren gehen oder die Botschaft unklar oder unvollständig wird.

3. **Formalität**

Die ursprüngliche Formalität des Textes muss strikt bewahrt werden.

4. **Sprachvalidierung**

Doppelprüfung, um sicherzustellen, dass die Sprache der Ausgabe Deutsch ist.

5. **Format**

Geben Sie ausschließlich den überarbeiteten Text in der Sprache des Original textes ohne zusätzliche Erklärungen an.

### Zu kürzender

“TEXTE”

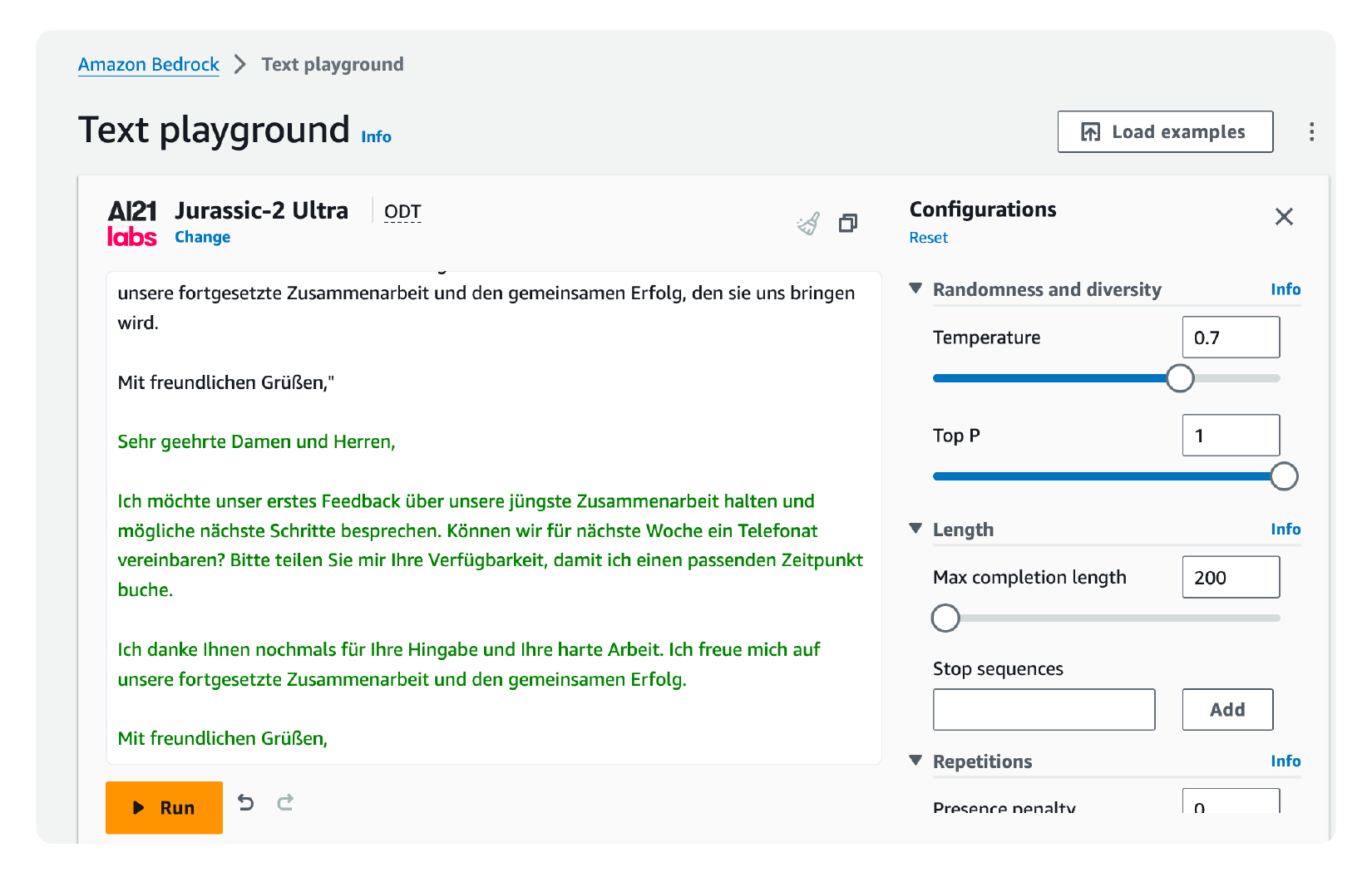

Default Jurassic-2 Ultra parameters: Temperature – 0.7, Top P – 1, Max completion length – 240.

Iteration #1

Let’s increase the default temperature from 0.7 to 0.8, the top P—from 1 to 0.8, and the completion length — up to 8191.

The output:

“Hallo,

Ich möchte unser aktuelles Projekt besprechen. Können wir ein Telefonat für nächste Woche vereinbaren?

Viele Grüße,”

So, you see that increasing the temperature and top P leads to a more diverse output and the LLM completely ignored the size requirement from the prompt cutting the size of the by 80-90%.

Iteration #2

This time we will increase the temperature up to 1 and limit the output to the recommended default size — 240. Top P parameter will remain as 0.8.

The output:

“Sehr geehrte Damen und Herren,

ich möchte den Kontakt zu halten und über mögliche nächste Schritte nach unserer jüngsten Zusammenarbeit besprechen. Das erste Feedback unseres Teams war überwältigend positiv und wir sind bestrebt, unsere erfolgreiche Partnerschaft fortzusetzen. Könnten wir für nächste Woche ein Telefonat vereinbaren, um dies detaillierter zu besprechen? Bitte teilen Sie mir Ihre Verfügbarkeit mit, und ich werde sicherstellen, dass ein für Sie passender Zeitpunkt gebucht wird.

Ich danke Ihnen nochmals für Ihre Hingabe und Ihre harte Arbeit. Ich freue mich auf unsere fortgesetzte Zusammenarbeit und den gemeinsamen Erfolg, den sie uns bringen wird.

Mit freundlichen Grüßen,

[Your Name]”

As far as we can see, the LLM again ignored the size requirement. The updated text is almost the same size as the original one and, additionally, the degree of formality was increased as well. The output we received feels much more formal than the input.

Basically, in the case of Jurassic, tweaking LLM parameters didn’t improve the outcome, vice versa, the output just got worse. So, for the text compression operation, we got back to the Jurassic default parameters and received this output:

The output:

“Sehr geehrte Damen und Herren,

Ich möchte unser erfolgreiches Engagement fortsetzen und möchte Sie höflich um eine Verbindung bitten. Können wir für nächste Woche ein Telefonat vereinbaren, um dieses Thema in weiterer Einzelheit zu besprechen? Bitte teilen Sie mir Ihre Verfügbarkeit mit, und ich werde sicherstellen, dass ein für Sie passender Zeitpunkt gebucht wird.

Herr/Frau [Name]

Wir danken Ihnen für Ihre Einsatzbereitschaft und Ihre herausragenden Leistungen. Wir freuen uns auf eine weitere gemeinsame Zusammenarbeit.

Mit freundlichen Grüßen,

[Name]”

The text size is cut by 10-30%, the formality of the new text gravitation more towards professional writing rather than casual, but this is not critical.

We discovered different outcomes of parameters tuning on different text operations and can safely say that, in the majority of times, increasing/decreasing them does work. So, don’t be afraid of experiments.

WProofreader AI writing assistant

Rewrite and text generation for business and individual users. Contact us.

Multilingual WProofreader AI writing assistant

Slowly we’re moving to the most important part of this blog post — a usual promo of WProofreader AI writing assistant 😁

Typically, here we’re singing praises to our core product — WProofreader, so brace yourself or just skip this part. But we strongly advise you to install our free WProofreader extension for instant spelling and grammar check.

In a nutshell, what is WProofreader AI writing assistant?

The WProofreader AI multilingual writing assistant will be a top-notch feature inside the WProofreader SDK and our browser extension for text rewrite and content generation.

What sets our AI writing assistant apart from the rest is its multilingual nature and capability to be deployed within client infrastructures. The initial release, as an MVP, will encompass prompts for text shortening, expansion, rewriting, style improvement, and style switching (from formal to informal and vice versa) and is scheduled for this spring.

Looking ahead, we plan to expand the range of predefined prompts and empower users to create their own personalized prompts.

Crucially, the WProofreader AI writing assistant will adhere to the principles of responsible AI, emphasizing ethical considerations, fairness, transparency, and accountability.

On a side note

So, summing up our exploration into language models and parameter tuning:

- We’ve delved into the nuances of adjusting parameters like temperature, top P, and max completion length in multilingual LLMs on Bedrock, unlocking their potential for diverse and coherent text generation. Our experiments revealed the crucial impact of parameter tweaks on text operations such as shortening, expansion, rewriting, and style switching on the final output.

- When choosing a multilingual LLM on Bedrock we paid attention to a number of factors, including pricing, licensing, use cases, size and compatibility, etc.

- Looking ahead, WProofreader AI writing assistant promises a cool new rewrite/generative AI functionality, boasting multilingual, secure, and responsible features. Anticipate text shortening, expansion, rewrite, style improvement, and a dynamic style switch from formal to informal and back. The magic of language continues to evolve — stay tuned for the next chapter!